Orcmid's Lair

Welcome to Orcmid's Lair, the playground for family connections, pastimes, and scholarly vocation -- the collected professional and recreational work of Dennis E. Hamilton


2008-08-30 Interoperability: The IE 8.0 Disruption

Technorati Tags: interoperability, web standards, trustworthiness, IE8, usability, web site construction, compatibility

I've elected to adopt the IE 8.0 beta 2 release as a tool for checking the compatibility of my web and blog pages. I see how disruptive the change to default standards-mode is going to be and how IE 8.0 is going to assist us. I need to dig out tools and resources that will help me mitigate the disruption and end up with standards-compliant pages as the default for new pages.

Looking Over IE 8.0 beta 2

I avoid beta releases of desktop software, including operating systems and browsers. Because the standards-mode default of IE 8.0 is going to place significant demands on web sites, I nevertheless thought it time to install one copy of IE 8.0 simply to begin assessing all of my web sites and blog pages for being standards-compliant enough to get by. In this specific case, I am willing to risk use of beta-level software in order to be prepared for the official release.

I'm also sick of having IE 7.0 hang and crash on mundane pages such as my amazon.com logon. I'm hoping that even the beta of IE 8.0 will give me some relief from the IE 7.0 unreliability experience. And so far, so good.

With the promotion of beta2 downloading this past week, I took the plunge. Installation was uneventful and all of my settings, add-ins, favorites and history were preserved. My existing home page, default selections, menus and tool bars were also preserved. [I am using Windows XP SP3 on a Windows Media Center PC purchased in September, 2005. IE 8.0 beta 2 also seems faster on this system in all of its modes.] I did not review much of the information available on IE 8.0, expecting to simply try it out.

My first surprise was a change to the address bar. There is a new format where all but the domain name of the URL are grayed. That was distracting for the first few days and it still has me stop and think. I realized this is the point: emphasizing the domain name so that people will tend to check whether they are where they expect to be. I like the idea, even though I have to look carefully and remember the full URL is there when I want to paste it somewhere or share the page on FriendFeed or elsewhere. I take this provision as one of those small details that demonstrates a commitment to safe browsing and confident use of the Internet.

Clicking the compatibility-view button causes it to be shown as depressed and the page is re-rendered as a loosely-standard page with the best-effort presentation and quirks renderings of IE 7.0 and earlier Internet Explorer releases. If you leave the button selected, the setting is remembered and automatically selected on your next visits to the same domain. It stays that way until you unselect the button by clicking it again while visiting pages of that domain. It was this feature that tipped me over into wanting to check out my own pages using beta2 (although I thought the button was tracked at the individual page level until I read the description of domain-level setting).

By the way, if a page is detected to require a standards or compatibility mode specifically, no compatibility view option button is presented. The amazon.com site is this way from my computer, and so is Vicki's pottery-site home page. I looked at the source of the amazon.com site and confirmed that they are not using the special tag that requests that the compatibility view be automatic. I didn't check the HTTP headers to see if they are using that approach to forcing a compatibility or a standards-mode view. I know I did nothing of the kind on Vicki's site. This suggests to me that there is also some filtering going on in standards-mode rendering to notice whether a compatibility view should be offered. I'm baffled here. I am curious whether there is any browser indication when the compatibility view is selected by a web page tag or HTTP header. I suspect not, and I'll be checking into that soon enough.

I also checked out the InPrivate browsing feature, which, although popularly dubbed the "porn mode," is very useful when using a browser from a kiosk or Internet cafe and when making private on-line transactions from home.

At this point, I am not interested in special features of IE 8.0 other than those related to improving the standards-compliant qualities of web pages and the browsing experience.
I may experiment with other features later. My primary objective is to use the facilities of IE 8.0 and accompanying tools to improve the quality and longevity of my web publications. Once I have some mastery over web standards, I will look into accessibility considerations, another project I have been avoiding.

Disrupting the State of the Web

The problem that IE 8.0 is intended to help resolve is the abuse of Postel's Law [compatibility view offered] that the web represents: "be conservative in what you do, be liberal in what you accept from others." The abuse arises when what you do is based on what is being accepted, with no idea what it means to be conservative. The web was and is an HTML Wild West and it is very difficult to enforce conservatism (that is, strict standards conformance in web-page creation). Since browsers also varied in what they accepted and then what they did with it, loosely-standard pages and loosely-standard browsers have been the norm and web pages are crafted to match up with the actual response of popular browsers.

Since Internet Explorer is made the heavy in this story, we now get to see the price of changing over to "be strict in what is accepted and be standard in what is done with it." This is a very disruptive change. We'll see how well it works.

Joe Gregorio argues that exceptions to Postel's Law are appropriate. Some, like Joel Spolsky [no compatibility view], think it might be a little too late. There are already some who claim that the IE 8.0 Compatibility view is a sin against standardization [compatibility view offered], no matter that not many of the 8 billion and climbing pages out there are going to be made strictly-conformant any time soon. With regard to compatibility mode, I think it would be foolish for it not to be there and Mary-Jo Foley is correct to wonder how much complainers are grasping at straws.
It was surprising to me to observe how regularly the compatibility-view option button appears and how terribly much of my material renders in IE 8.0's standards mode. Apparently the button is there because IE 8.0 can't tell whether the page is really meant to be rendered via standards-mode or is actually a loosely-implemented page. I'm spending a fair amount of time toggling back and forth to see if there is any difference on sites I visit. This suggests to me that there is going to be a rude awakening everywhere real soon now. It is also clear to me that I don't fully understand exactly how this works, and I need to find a way to test the explanation on the IE blog and the discrepancies I notice, especially when the compatibility-view option is not offered and I know nothing special was done to accomplish that on the web page I am visiting. I am also getting conflicting advice when I use an on-line web-page validator. This change-over to unforgiving, default-standards-mode browsers is going to be very disruptive for the Internet. In many cases, especially for older, not-actively-maintained material, the compatibility view is the only way to continue to access the material successfully. There is a great deal of material for which it is either too expensive or flatly inappropriate to re-format for compatible rendering using strictly-standard features. Without compatibility view, I don't think a transition to standards mode could be possible. The feature strikes me as a brilliant approach to a very sticky situation. Although there is a way to identify individual pages as being loosely-standard and intended for automatic compatibility view, that still means the pages have to be touched and replaced, even to add one line to the <head> element of the HTML page. There are billions of pages that may require that treatment. Perhaps many of them will be adjusted. That will take time. 
Meanwhile, having the compatibility-view option and its automatic presentation is very important.

There is also a way to adjust a web server to provide HTTP headers that request a compatibility (or standards-mode only) view of all pages from a given domain. That strikes me as a desperate option to be used only when there is no intention of repairing pages of the site. I might do that temporarily, but only while I am preparing for a more-constructive solution that doesn't depend on compatibility view being supported into the indefinite future.

The variations on the available forms of control (browser mode, DOCTYPE, HTTP header, and meta-tag) need to be studied carefully. I expect there to be confusion for a while, if only because I am feeling confused by the ambiguities in my experience so far.

Another problem, especially with regard to IE 8.0 beta2, is that we don't reliably know how badly a loosely-standard page will render with a final standards-mode browser versus the terrible standards-mode rendering that beta2 sometimes makes at this time. It is conceivable that the degradation might not be quite so bad as it appears in beta2, but there is no way to tell just yet.

The need for expertise and facility with semi-automated tools as part of preserving sites with standards-conforming web pages is probably a short-term business opportunity.

The web sites that may be able to make the transition most easily may be those like Wikipedia, where the pages are generated from non-HTML source material. (That makes it surprising that Wikipedia pages currently provoke compatibility buttons and compatibility view is needed to do simple things like be able to follow links in an article's outline.)
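To make the page-level forms of control a little more concrete, here is a minimal sketch of a checker that reports which of them a given page carries: a DOCTYPE, a compatibility meta-tag, or a compatibility HTTP header. It is an illustration and not a tool I have verified against IE 8.0 itself. It assumes the header and meta-tag name is `X-UA-Compatible` with values such as `IE=EmulateIE7`, as I understand Microsoft's published guidance; the names `CompatProbe` and `compat_controls` are made up for this example.

```python
from html.parser import HTMLParser


class CompatProbe(HTMLParser):
    """Collects the DOCTYPE and any X-UA-Compatible meta tag from a page."""

    def __init__(self):
        super().__init__()
        self.doctype = None      # text of the <!DOCTYPE ...> declaration, if any
        self.meta_compat = None  # content of the compatibility meta tag, if any

    def handle_decl(self, decl):
        # Called with the text inside <! ... >, e.g. 'DOCTYPE html PUBLIC ...'.
        if decl.lower().startswith("doctype"):
            self.doctype = decl

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name.lower(): (value or "") for name, value in attrs}
        # Assumption: the compatibility request uses http-equiv="X-UA-Compatible".
        if attr.get("http-equiv", "").lower() == "x-ua-compatible":
            self.meta_compat = attr.get("content", "")


def compat_controls(html_text, http_headers=None):
    """Report which page-level compatibility controls are present."""
    probe = CompatProbe()
    probe.feed(html_text)
    headers = {k.lower(): v for k, v in (http_headers or {}).items()}
    return {
        "doctype": probe.doctype,
        "meta": probe.meta_compat,
        "header": headers.get("x-ua-compatible"),
    }
```

Feeding it the saved source of a page, plus the response headers if they are at hand, gives a quick answer to "did this page ask for anything?" before guessing at what the browser decided on its own.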
Mitigating IE 8.0

To mitigate the impact of IE 8.0 becoming heavily used, it is necessary to find ways to do the least that can possibly work at once, and then to apply that same attitude in making the next most-useful change, and so on, until the desired mix of standards-compliant and loosely-compliant pages is achieved. To find out what tools are available along with IE8 beta 2, these pages provide some great guidance and resources:

That should point you to all of the resources you need to understand how to check sites, how to use the compatibility provisions, and other ways to take advantage of IE8 availability when it exits beta. I'm looking at a progression that will allow the following:

I will work out my own approach on Professor von Clueless, since I have definitely blundered my way into this. This post is also being used to identify the IE8 mitigation required for this blog, along with some other improvements:

When I update the template to force compatibility with the current loosely-standard blog-page generation, this post will reflect that too.

[update 2008-08-30T16:42Z I had a few clumsy bits to clean up, taking the opportunity to elaborate further in some areas. The disruption with standards-mode web browsing is a great lesson for standards-based document-processing systems and office-suite migrations toward document interoperability. I'm going to pay attention to that from the perspective of the Harmony Principles too.]

Labels: confirmable experience, IE8.0 mitigation, interoperability, trustworthiness, web site construction, web standards

Comments:

just make it simpler: use Firefox you have better things to do that support poor browsers that never took standards serious.

My concern is not about choice of browser, it is about the level at which my web-site and blog pages are standards-conformant and will render properly with standards-conformant browsers. It happens that the IE 8 beta 2 compatibility-view option is giving me a way to confine my incompatibilities and then remove them as browsers all become standards-compliant together.

2008-08-14 Golden Geek: Executives and Malcontents

Technorati Tags: orcmid, cybersmith, Golden Geek, 1960s, managing developers, software development, Sperry Univac

Shortly after the East-coast software-development operations of Sperry Univac were consolidated in Blue Bell, Pennsylvania, we began hiring new college graduates and putting them through systems-programmer training (what we would now think of as operating-system and programming-languages and tools software development). There were not yet many established computer-science undergraduate programs and we needed to provide some common foundation and basics for developers in our world. We also accepted trainees from within the company, including one engineering draftsman and a number of administrative assistants (then known as secretaries). I don't recall any computer operators or field computer-service types, although we did recruit promising candidates from those areas.

I was one of the first instructors when this started around 1966. I also became the lead for a small group of advanced-software development technologists (harboring one of the first efforts to build a non-LISP functional-programming system in the United States).

The software organization (remarkably small by today's standards) that these newcomers inoculated became youthful, rambunctious, and energetic. It was a time of enthusiastic growth.

Dave: Brilliant Malcontent Hacker

One of the developers that I brought into my team, Dave B., had been mis-hired. Although he came in along with a Summer crop of new-graduate hires, he was an experienced developer and drop-out. His experience showed. And Dave was seriously underpaid. I suspect, when he was first hired, he was looking at more money than he'd ever received before. It was also less than what the new-graduate limited-experience hires were making. It didn't take Dave long to figure that out. The problem, of course, is that once you are in the system it is very difficult to break out of the annual merit-pay gradual-increase system.

Dave was also a bit iconoclastic with a seasoning of malcontent.
That's probably how I was so easily able to add him to my group. He was also an important, helpful contributor. One of his achievements was to develop a braille-printing output converter so that our first blind programmer could obtain listings and tests that were readable as Braille from the back side of the fan-fold sheets.

At some point, Dave's review came up and I put him in for the correction that he claimed was merited. The adjustment was declined, of course. The next step was to find a way to appeal it. Dave had to do the real work but I was able to add my support and recommendation.

Don: Decisive Executive

The appeal authority was Engineering Division Vice-President Don N., someone who only recently had the Systems Programming group brought under his wing. I was several levels below Don and we had never met one-on-one.

Dave was given an appointment with the V-P. That same day I received a phone call from Don saying he was about to meet with Dave and he had just one question: "Was Dave worth it?" I said yes. Don told me what he was going to do. That's probably one of the shortest, most-decisive conversations I've ever had.

Later, Dave provided his account. Don talked with Dave, listened to his concerns about his situation and I'm sure a little about the company and how we operated. Then Don made his offer. Don would approve the special pay increase and change of grade under the condition that Dave would stay with the organization for the next 18 months. That was it.

Dave needed to think about it. What he did instead was leave the company, ultimately starting his own small software consulting company in his home town.

Lessons: Resolution and Confronting Life

There were two lessons for me. First was the great example of executive decisiveness. The Vice President accepted my judgment as the direct manager and advocate for Dave. His offer was completely straightforward. Second was how Dave was offered a clear resolution to his salary concerns.
What that revealed was that Dave's dissatisfaction was about more than salary. Although Dave and I have been out of touch for 30 years, I now wonder if he appreciates the gift that Don made to him.

It is not unusual for people early in their career to decide that the path they are on doesn't work for them. They may fault the world or their dissatisfaction may be something that drives them to realize that they crave a different path. I think of Dave as having accomplished that. I also think that happened for others who were still footloose and chose to stop programming and teach disadvantaged children or finish college and graduate school before moving into a different career.

There are also people who entered the system-programming group at that time and stayed on, retiring from what became Unisys. Others left and returned, some more than once.

The Greater Lesson

A third lesson took me more time and many installments to appreciate. I fit the pattern of the successful malcontent, just like Dave.

I've since learned how powerful it is to see the world as already perfect. Then it does not need to be fixed and certainly not complained about. That leaves only simple questions: what do I stand for, what am I committed to, and what's next?

While recalling Dave's experience this morning, I saw this nice reminder from Leo Babauta that offers access to freedom for malcontents. It does not weaken the useful challenge to "change the world or go home."

I don't write these reminiscences in any particular order even though I have a progression in mind. Sometimes, such as today, something triggers a recollection that I want to pass on at once. There will be more like this.

2008-08-13 Social Computing: My Graphs over Our Grid?

Technorati Tags: open platform, social grid, social computing, orcmid, Eric Norlin, interoperability, Defrag, open systems, Stowe Boyd, Live Mesh, Windows Home Server

Eric Norlin applauds, "From the 'web of pages' to the 'web of flow'":

The applause is for Stowe Boyd's August 5 piece, Blogging 2.0 Doesn't Go Far Enough. That rich piece touches on a variety of topics and envisions a new kind of blogging (i.e., participation) tool. I'm not that enthralled with the amount of freight micro-posts have to carry (usage rights, for example), but I am enamored of a mechanism that allows a coordinated view of the current streams brokered by Twitter, Facebook, FriendFeed, and all of the other tools that straddle contribution and comment and fumble at making digestible discussion. This is not a new problem, but amping up the Internet has made it acutely noticeable. My cherry-picking of Stowe Boyd's appraisal:

I also want tools that recover conversations from the cataracts of utterance, engaging in them at liberty and preserving a tangible trace.

There are more specifics. I am still pondering the generalities.

I'm still pondering whether we might already have the bits of an infrastructure for participating in the disjointed flood of loosely-coupled utterances that is becoming the Web. Our pages and feeds become rafts and life-lines for each of us to claim our coherent presence. For the nuts and bolts, I wonder about open grids (where Live Mesh might be the archetypical enabler) and self-hosted presence hubs (where the customizable Windows Home Server may be an instance).

At some point, I am going to have to dig in deeper than mere wondering. This will doubtless arise as I am whipsawed by the transition to fully-64-bit platforms.

2008-08-03 Microsoft ODF Interoperability Workshop

Technorati Tags: interoperability, Microsoft, OpenDocument Format, Microsoft Office 2007, ODF-OOXML Harmonization, ODF, OOXML, Document Interoperability Initiative

On Wednesday, July 30, a full-day workshop explored Microsoft's approach to adding Open Document Format support directly into Office 2007. Microsoft's first built-in support will arrive with the Office 2007 SP2 service pack expected mid-2009-ish. Attendees kicked the tires on the current pre-beta implementation (well before initial-beta availability sometime this-year-ish). The workshop provided interaction with the ODF community on technical approach, the challenges being faced, and the balancing act that Office-ODF interoperability requires. By the first break, observing all of the lively conversations among the attendees, I concluded that the meeting was already a success. The additional sessions and the evening dinner reinforced that conclusion. I saw no down side. In addition to providing a face to the Microsoft Office developers working on ODF implementation, the meeting provided face-to-face acquaintance among people who had only known each other through their presence on the web. That was a special reward for me. Here are my impressions.

I am cherry-picking aspects of the workshop that fall in my areas of concern. This is out of balance with the full range of topics and the discussion. I invite your consultation of additional posts on the overall event.

[update 2008-08-06T17:31Z There are a few other posts added to the list of links, with adjustments of the text where availability of the other information is pertinent. I corrected a couple of grammar slips at the same time.]

1. Context

On May 21, 2008, Microsoft announced initial built-in support of OpenDocument Format as part of the 2009 release of the Microsoft Office 2007 SP2 service pack. Some interpreted this as favoring ODF over the updating of existing OOXML provisions to align with differences introduced in IS 29500, changes not expected until the next version of Microsoft Office.

[In my reality, inherent limitations of the current ODF specifications prevent anything close to parity with the already-substantial IS 29500 support in Office 2003-2007 until a future version of Office. Support for evolution of ODF (standards and implementations), of OOXML (standards and implementations), and of down-up-level compatibility in the same integrated office-productivity suites will involve some fascinating and instructive evolution of ODF and OOXML support under the same roof.]

One month later (June 22, 2008), OASIS Open Document TC members were invited to the July workshop by their new member, Microsoft's Doug Mahugh. After ensuring that all ODF TC members who desired to come had a place, Mahugh issued a general invitation on July 9. The July 23 welcome kit provided the essential parameters:

Along with briefings and hands-on usage, the agenda included three full-group roundtable discussions on topics that Microsoft was grappling with. A fourth would be added, along with free-wheeling discussions held throughout the day. The emphasis on this being the first such workshop and the absence of non-disclosure agreements are heartening indications that a serious, open conversation is beginning.

2. General Approach to Document Interoperability for Microsoft Office

Following the initial welcome, Paul Lorimer (Group Program Manager, Office Interoperability) sketched his organization's responsibility for standards engagement across Microsoft Office, including Doug Mahugh's participation at the ODF TC and other standards bodies. Lorimer pointed to the significant amounts of interoperability documentation, including for the binary formats and Office-related protocols, that have been produced. Lorimer sketched the progression of the work from the start of aggressive licensing in 2003 to the Interoperability Principles and Document Interoperability Initiative of 2008.

For ODF specifically, Peter Amstein (Development Manager for Microsoft Word and ODF-implementation architect for Office) described the five guiding principles that govern ODF support in Word, Excel, and PowerPoint. These set the priorities that apply in making trade-offs:

Along with this prioritization, there is balancing of different interests: standards groups, corporations, institutions, government agencies, regulatory bodies, and general users.

Doug Mahugh explained that ODF 1.1 (instead of the ISO 26300 standard for ODF 1.0) is chosen because of the accessibility additions and because current non-beta implementations are overwhelmingly for ODF 1.1. Amstein added what I take as another important reason for starting at this level: where ODF 1.1 is ambiguous or incomplete, the Office implementation can be guided by current practice in OpenOffice.org, mainly, and other implementations including KOffice and AbiWord.

Peter Amstein and the Microsoft Office team are reluctant to make liberal use of extension mechanisms, even though provided in ODF 1.1. They want to avoid all appearance of an embrace-extend attempt.

3. The Devil Is in the Details

Balancing of competing considerations is not trivial. To illustrate the tensions involved, there's a significant challenge for Excel support of ODF spreadsheet documents: there is no way to incorporate spreadsheet formulas in ODF files without relying on an extension mechanism. It is, of course, not a meaningful option to omit support for spreadsheet formulas in ODF spreadsheet documents.

All ODF-conformant spreadsheet implementations, including that of OpenOffice.org, must use extension mechanisms to implement their particular spreadsheet formulas. That is the common practice in this case. Accordingly, Excel uses formula extension to preserve OOXML-defined Excel formulas in ODF spreadsheets. Excel identifies the formulas as employing its extension and accepts them back from ODF input files. In the pre-beta implementation, Excel drops any formulas based on different "foreign" extensions. I presume the current thinking is to eventually converge on OpenFormula rather than attempt to map to any other foreign extensions, even OO.o's, in the interim.
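The difference between checking an extension identifier and ignoring it can be sketched in a few lines. This is only my illustration of the behavior as described at the workshop, not anyone's actual code: it assumes a formula is stored as an attribute value of the form `prefix:=expression`, and the prefixes `msoxl` and `oooc` used here are placeholder assumptions rather than identifiers confirmed at the workshop.

```python
# Assumed (hypothetical) identifier for the application's own formula extension.
OWN_PREFIX = "msoxl"


def split_formula(attr_value):
    """Split 'prefix:=expr' into (prefix, '=expr'); prefix is None if absent."""
    head, sep, rest = attr_value.partition(":=")
    if sep and head.isalnum():
        return head, "=" + rest
    return None, attr_value


def load_formula_strict(attr_value, own_prefix=OWN_PREFIX):
    """Strict reader: keep a formula only when it carries our own extension
    prefix; anything tagged with a foreign extension is dropped (None)."""
    prefix, expr = split_formula(attr_value)
    return expr if prefix == own_prefix else None


def load_formula_lenient(attr_value):
    """Lenient reader: ignore the prefix and hand the expression to the
    formula parser regardless of which extension produced it."""
    return split_formula(attr_value)[1]
```

With these definitions, `load_formula_strict("oooc:=[.A1]+[.B1]")` returns `None`, while `load_formula_lenient("msoxl:=A1+B1")` returns `"=A1+B1"` and will appear to work whenever the two formula dialects happen to coincide.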
The irony is that current OpenOffice.org implementations fail to check whether a formula conforms to its own extension or not. OpenOffice will inadvertently but successfully accept some formulas produced by Excel's ODF implementation as if they are OO.o's. [Afterthought: This happenstance is a doubtful blessing in terms of the potential for user confusion and it may fail the predictability criterion even though this is not Microsoft's problem to solve.] When an Excel formula is successfully "OOo-injected" this way, it will be saved with identification as an OO.o-extension formula and Excel will ignore that unrecognized ODF extension on return in an ODF. [update: Florian Reuter has posted a defect report to OpenOffice.org based on this and other information from the workshop.]

This provisional, hopefully-interim Excel-formula approach is an extreme case of extension conflicts; it didn't arouse much concern at the workshop. There were other situations where the Office team takes the opposite approach and avoids extensions. This triggered greater concern from some participants.

4. Impact of the Office Processing Model

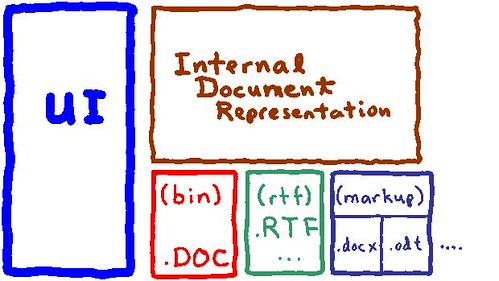

In my unofficial even-simpler version (fig.1), the central feature of typical document processing software is the internal, in-memory representation of the software's document model. The User Interface (UI) provides the creation, manipulation, and viewing/presentation features that the human operator observes and controls. It is important to recognize that all of the user-interactions with the document features involve the internal document representation. It is that representation that supports the UI-selectable features and the visible results that are displayed. At a lower level there are operations that can be selected by the user for transfer between the document representation and external elements (printers, scanners, and persistent storage, typically). In the diagram, I've featured those document-processing services that transfer between internal representation and persistent storage in a variety of formats. Depending on constraints of the internal document representation and the strength of the architectural boundary for import-export of persistent forms, the internal document representation might not reflect anything about the persistent forms that are supported. Architecturally, this is a widely-used approach to maintaining editing performance and isolating file-format treatment in document load and save operations. Topics I Didn't Think to Raise. I didn't notice the implications of this for ODF interoperability in Office (and OOXML interoperability elsewhere) until ruminating the next day. The problem arises as soon as there are multiple formats that must be coherently supported in a single implementation. If UI features work against the internal document representation, they may have little capacity for reflecting differences in capabilities with respect to the persistent formats that are accommodated. 
Typically, disparities between the internal representation's capabilities and the features of persistent forms are resolvable only when there is a transfer (either input or output) between the internal document representation and an external representation. The first impact is that features may be lost on input. The second impact is that features may be lost on output. This seems straightforward until we realize that the user may rely on features that succeed with the internal representation, only to have them be degraded or lost entirely in the chosen external representation. You can use all of the software's features while editing and end up losing some of them when saving the document. An added complication is that users might not need to commit to any particular external representation before editing. It is not unusual to save a single internal document in more than one format, from crudely-formatted plain-text to HTML to whatever the richest "native" external format is. To the extent that an office-processing model maintains internal ignorance of the external formats, there may be difficult-to-mitigate user-experience consequences.
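A toy version of this processing model shows where the surprise comes from. The sketch below is entirely hypothetical: the format names and feature names are invented for illustration and say nothing about what any real product or format supports. The internal document accepts every feature, and the disparity with a persistent format only surfaces at save time, unless the software offers some kind of pre-flight check such as `lost_on_save`.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    """Internal, in-memory representation: the UI works only against this."""
    text: str
    features: set = field(default_factory=set)


# Hypothetical capability table: which features each persistent format can carry.
FORMAT_CAPABILITIES = {
    "plain-text": set(),
    "format-a": {"bold", "tables"},
    "native": {"bold", "tables", "conditional-formatting"},
}


def lost_on_save(doc, fmt):
    """Pre-flight check: features that would be dropped by saving as fmt."""
    return doc.features - FORMAT_CAPABILITIES[fmt]


def save(doc, fmt):
    """Export step: only features the target format supports survive."""
    kept = doc.features & FORMAT_CAPABILITIES[fmt]
    return {"format": fmt, "text": doc.text, "features": sorted(kept)}
```

The same internal document can be saved three ways with three different outcomes, and nothing in the editing experience distinguishes them until `save` or `lost_on_save` is called.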

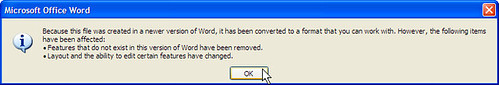

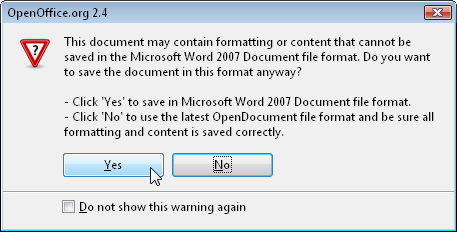

Speaking from Ignorance. I have no knowledge of Microsoft Office System internals. I can't speculate what the specific limitations might be, if any. However, as we move toward increased interoperability where multiple productivity-software formats are supported as fully as possible in the same product, only learning about degradation at input and output will become unhelpful (fig.2). This consideration applies not just to Microsoft Office; it applies to all products that rely on a similar separation of concerns and have an integrated internal document representation. It will be challenging to smooth out movement among the formats without discouraging and alienating users. [I don't believe that limiting users to a greatest-common-denominator internal representation is an option for mainstream productivity suites, even though I advocate exploring that option for cases where interoperable fidelity trumps all else.] More Flavors of Import/Export. We did not dig into the model at the workshop. After providing a basic sketch of the overall model, Peter Amstein continued with an explanation of the different ways external formats are employed in the main Office 2007 applications (fig.1):

There were brief examples applying guiding principles to a number of specific cases other than those I've identified here. [update: Doug Mahugh has provided an extended account of the principles and of the Model-View-Controller processing model.]

5. Demonstrations and Discussions

Here are a few highlights. Jesper Lund Stocholm has provided more information in his sketch of the proposed approaches and the roundtable discussion.

5.1 Microsoft Word 2007 ODF Support

Microsoft Word Program Manager Amani Ahmed provided a quick demonstration of the ODF support for Word documents in its pre-beta form. The first example involved opening the ODF 1.1 specification, in its ODF-format version, in Microsoft Word. The document opened directly and relatively quickly (especially in comparison with current translator plug-in solutions). Ahmed added text to the title page and saved the modified document. Opening the document in OpenOffice.org Writer, she scrolled around enough to demonstrate that her editing of the document had been fully preserved when saved back out to ODF by Word. (A sharp-eyed observer noticed that the saved document was noticeably but not frightfully larger than the original. The difference is apparently related to how the ODF styles are mapped into the Word 2007 internal representation and then brought out again in the pre-beta implementation.)

The second example consisted of a richly-formatted .docx with a number of interesting features, including defined value-entry fields. This document also transferred to ODF with preservation of its features.

Different Strokes for Different Folks. Chatting before the start of the workshop, ODF TC Editor Patrick Durusau reported that he often takes advantage of OpenOffice.org's ability to open Office binary-format documents. Durusau finds the OO.o interface leaner, more intuitive, and more appealing to use.
We had been commiserating on being lost in the Word 2007 UI and not being adept enough to know the still-working keyboard shortcuts. Watching Ahmed use Word 2007 to navigate around the ODF specification, I realized that Word 2007 is a better viewer for my specification-review work. Using OpenOffice.org and the new Adobe Reader 9, I am frustrated that I can follow cross-references and table-of-contents links but cannot backtrack (although I just now found and enabled the backtrack option in Reader 9). The document map, thumbnails, and screen-reading views of Word 2007 are what I have overlooked as aids to my specification-review efforts. Acknowledging that I may simply have failed to find the desired features in different products, it is encouraging that multiple implementations for standard formats will also expand opportunities for people to match their ways-of-working and, in particular, their individual ways of discovering the available functionality.

5.2 Microsoft Excel 2007 ODF Support

Microsoft Excel Program Manager Eric Patterson started with an .xlsx document that was saved as an ODF .ods spreadsheet document. Opening in OpenOffice.org Calc preserved a variety of features but not all conditional formatting cases. A simple formula transferred correctly (by accident) and the returned formula was dropped by Excel, as already discussed (section 3). The current proposal is to allow all Excel capabilities to be used with the internal representation even though saving the document as ODF will lose some of the features. The thinking is that a number of the special formatting and presentation capabilities in Excel 2007 are valuable to have available even though they are not preserved in the saved ODF spreadsheet. I suppose this would be useful when preparing a printed document or when keeping it privately in .xlsx and circulating the ODF otherwise.
I'm not so sure about such niceties if they have to be re-introduced each time the ODF version returns or is re-opened. [Afterthought: Another way to have more preservation of some advanced formatting and logic would be to paste or embed the richer Excel version into an OpenOffice.org-produced ODF document. But such cases are already available without requiring ODF support in Office 2007. Added 2008-08-06: Oddly, the OLE degradation cases (missing application, missing linked file, missing OLE support) work more smoothly and round-trip restoration of original functionality works too.]

5.3 Microsoft PowerPoint 2007 ODF Support

Microsoft PowerPoint Program Manager Alan Huang demonstrated two interesting aspects to the saving of PowerPoint 2007 presentations as ODF presentations. His example included a two-level master hierarchy (themes to masters to instances), transitions, tables, slide notes, and changed templates on some slides. The presentation appearance was preserved very well, although the master hierarchy is lost in the ODF and tables lose their "tableness." There are mismatches in coloring and gradients, and some layout-preservation bugs are being looked into. This is one of the places where pixel-for-pixel fidelity can be expected, and where, as John Head ably advocated, users likely won't care about standards if conformance prevents that. The cases are complicated, especially where Microsoft Office features don't have a safe representation in ODF and where ODF features don't map well into the Office model. I imagine there are also concerns about self-compatibility as the support for ODF evolves with future releases.

5.4 Office Graphics Support in ODF

Megan Bates, working on shared graphics and objects across the Office suite, demonstrated how graphics are being mapped to and from ODF. Some of the features that are new in Office 2007 do not map well into ODF.
In some cases, it is proposed that loss of shape and color fidelity be tolerated in order to preserve editability in ODF applications. These graphics arise in Word, Excel, and PowerPoint documents and feature discrepancies will be apparent to those who rely heavily on the most-advanced aspects. I'm not sure there was any clear proposal whether OLE-embedded objects would be moved through ODF, although it is provided for in the specification and it is also supported by OpenOffice.org. There is also the difference between interoperating with ODF of your own origination and not that of others (a version of the spreadsheet formula situation) because the OLE binaries are necessarily introduced via ODF-tolerated extension. [Added 2008-08-06: Oddly enough, reliance on OLE embeddings can, in these cases, provide better predictability and more gradual degradation of fidelity in the absence of corresponding support in a different application configuration. This has to be balanced against the prospect of creating a covert channel for leakage of embedded data.]

5.5 Roundtable Discussions

The roundtables covered four topics and then some general discussion.

These discussions did not arrive at conclusions. The purpose was to air different approaches and concerns, giving us and the Microsoft team much to think about. The purpose was fulfilled. I also saw some points that may be worth expanding into separate web posts and discussions.

5.6 Meeting the People

It was delightful to finally meet a large number of individuals whom I knew of, all but two of whom I had never met in person:

It was quite a day.

6. What Others Are Saying

The following posts provide the varied perspectives of blogging attendees and other observers:

2008-07-15 European Independence and Open-Source Development

Dana Blankenhorn shows a little national-identity sensitivity in his 2008-07-14 ZDnet article, Europe seeks to brand open source quality. I noticed some time ago that there is a European movement that sees open-source development as a key to the emergence of a European software industry independent of U.S. domination. This is indeed a focus of Commission-funded activity in the European Union. It is also part of the Information Society vision of the European Commission. For whose benefit would we expect to see EU funding for Information and Communication Technologies (ICT)? It can be a bit startling, for those of us who are comfortably and carelessly American, to see Euro-centric homage given in reports of sponsored scientific and technical ventures, often involving government and industry partnerships (not unlike Japanese initiatives I have observed). It is a useful lesson to have us notice how much US-centric posturing is spewed into the world and how that lands in Europe and elsewhere. Look at our version of Olympic spirit, for one. Look at the Mars Phoenix site and see how the contribution of scientific groups of other nations is in the shadow of the acknowledgment of US institutions. (The Wikipedia coverage is superior in its featuring of all participants.) Along with this, there have been occasional descriptions of EU computer and technology projects that featured open-source development and the GPL as if that were a sufficient condition for excellence in the result. The oddest cases were announcements of open-source results for which the code, binary or source, was nowhere to be found. Apparently, the code was locked up in the chambers of the commercial partners in the government-sponsored work. On other occasions where code was in a public place, I found it to be un-installable, fragile, and unsupported by useful documentation.
This might be typical of too much academic-research work done on any continent, but it tended, for me, to tarnish the glitter of open-source magic. It is a bit far from the European Commission goal of commercialized technology enabled by open-source development and licensing.

SQ-OSS Is Different

Yes, the focus on support for European technology capabilities is clear:

The difference is that the development of the quality-assessment tools is a genuine, well-conducted, and globally-visible open-source undertaking. There is a level of development maturity here that matches the best open-source projects I have found. Those of us in the US and Canada will be happy to see that the code, involving lots of Java plus JAR files from other open-source projects (with a tiny dusting of C++) is fully available with narratives in a widely-recognized dialect of English. The project has a public Wiki and the on-line SVN repository is annotated with guidance to the contents starting at the top. Even the MAKEFILE structure is annotated with descriptive narrative. Considering that the Alitheia (Greek for "truth") deliverable from the project is at an alpha-level of release (0.8.1), I find a great deal to be comfortable with in how this deliverable is developed. (If there is anything obviously lacking, it is test code used to confirm the builds and the units that go into it. I may not have looked closely enough, and this is certainly something anyone could contribute as part of learning to build, use, and adapt the package.) I find the project materials to be inviting and a contribution to open development. Also surprising is the use of the Free BSD license for the code that originated as part of the project. The project is also careful to include the licenses for those incorporated elements that are under different licenses as well. It would be nice if other open-source efforts were conducted so deliberately with attention to the usability of materials by others.

2008-07-13 Help ZoneAlarm Users Get Back on the Internet

Technorati Tags: interoperability, ZoneAlarm, Microsoft Update, DNS vulnerability, Internet access, Interoperability Forums

Although this should have blown over by now, you might have some family, friends, and colleagues who were unable to access the Internet after last week's security updates from Microsoft. Apparently the problem is limited to ZoneAlarm users. They can't see this post and the available remedies because they can't get to the Internet unless they figure out to

The basic situation is covered in the Microsoft Security Bulletin under the FAQ section and this Known Issues article. The ZoneAlarm workaround and updates can be found in this announcement. Update: Joel Hruska has a great 2008-07-14 summary of the roll-out of the DNS vulnerability fix on Ars Technica. There is no indication of the disconnect with ZoneAlarm's producer, Check Point Software. Also, if you or others are using ZoneAlarm on Windows and haven't run Microsoft Update in a while, be sure to update ZoneAlarm first. Then use Microsoft Update to obtain the DNS vulnerability fix and other recent security updates. No longer using ZoneAlarm myself, I did not learn about this issue until it was raised by someone looking for information on an Interoperability Forum. This is an interesting interoperability case. The ZoneAlarm package is listed by US-CERT as not being vulnerable to the DNS vulnerability, but there was apparently a secondary dependency on the correction made to Windows itself. How to protect against disconnects of this kind is partly an interoperability issue, it seems to me. I wonder whether this is an example of the hazard discussed in Interoperability: No Code Need Apply?

2008-07-10 Interoperability: What's the Self-Interest?

Technorati Tags: Microsoft, interoperability, free markets, regulatory requirements, self-interest, Buzz Out Loud

I noticed some interesting speculations on whether Microsoft should provide interoperability and whether doing so makes sense. I see in that discussion some bafflement about what self-interest in interoperability could be. Looking at examples from my own experience, I leave open for discussion the specific question of interoperability as a self-interest of a dominant producer in our industry.

Why Interoperate?

Over on the C|Net Forums Buzz Out Loud Lounge, a listener has started a thread on Microsoft Interoperability. The initial post starts out sounding like a tribute to laissez-faire (basically, hands-off of business) free-market economic policy:

I've taken these quotations out of context. The original post is more ambivalent, seeking further discussion. The three short replies are also equivocal. These observations stand out for me:

Looking over that discussion, it strikes me that the question is whether Microsoft, or any dominant producer, has a self-interest in interoperability, and what that might be.

An Example of Community Self-Interest

In my work with the Open Document Management API (ODMA), it became clear why small producers of niche products would build ODMA-compliant products. Support for ODMA helped to legitimize the entry of little-known suppliers into the document-management marketplace. By supporting ODMA, a niche document-management vendor gained more credibility for its claims to support integration with well-known office applications, especially Microsoft Word, Corel WordPerfect, and others. It has been interesting to observe this over the past dozen years as new products arose in Eastern Europe, the Middle East, and Asia. I believe that ODMA-using organizations are also reassured that they can manage and, if necessary, substitute ODMA-supporting document-management packages and desktop software. The self-interest on the part of adopters, whether or not they have much market voice, is fairly clear. Finally, there was a clear self-interest on the part of the original contributors to the ODMA specification and its connection-manager software. The arrangement satisfied a need they all had and that none of them found profitable to address independently. The producers of smaller document-management systems and interested suppliers of desktop software were comfortable enough to contribute to a community-developed and -owned solution. (Later on, ownership of support turned up missing, however.) I think it is clear how interoperability with existing specifications can be important for new entrants in what may be a specialized market niche. Similar experiences have accompanied the adherence to TWAIN for integration of scanners and cameras, and ODBC (and its descendants) for integration with structured-data sources.
There is a similar history and appeal for WebDAV, in many ways a more-substantial alternative to the arrangements supported by ODMA.

What is the Self-Interest of Dominant Players?

Although Microsoft was not a visible participant in ODMA development, Microsoft Word and PowerPoint (starting with Office 97 and at one point including the Office Binder) continue to support ODMA. Compatibility with these programs is an important benchmark for all ODMA-compliant document-management-system integrations. To the best of my knowledge, no Microsoft server product (e.g., SharePoint or Exchange server) has an ODMA integration. I find it intriguing that Microsoft participated in this arm's-length way; I have to remind myself that the competition on the desktop was rather different in 1996. I believe that dominant players can have a self-interest in supporting interoperability arrangements. I don't think that competition law accounts for all of it. I also believe that dominant players can find a self-interest in initiating interoperability arrangements, whether or not based on licensing agreements. Here's the question I want to throw open:

Speculations? How about for customers? Is the self-interest in interoperability that clear?


template

created 2002-10-28-07:25 -0800 (pst)

by orcmid

What I was looking for, and saw immediately, is the new compatibility-view button. This "broken page" button appeared on the first site I visited after installation of IE 8.0 beta 2.
